March 17th, 2025

Lots of people talking about A.G.I. this week - both for and against. Guess which side I think is correct.

Welcome to issue five (March 17th, 2025) of the Internet of Bugs Supplemental Mailing List.

This week in AI news... Sigh...

First off, we're told that “JPMorgan engineers’ efficiency jumps as much as 20% from using coding assistant”:

That's really good to know, or at least it would be, if they defined what they meant by "efficiency." How do you measure the "efficiency" of a programmer? I've been doing this 35 years, and I have no answer to that question.

The problem with measuring "efficiency" is that, as far as I'm concerned, it's made up of three factors: (tasks accomplished - technical debt incurred) / time elapsed.
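To make the formula above concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `WorkSample` type, the field names, the idea that tasks and debt can be expressed in the same unit, and all of the numbers, which are made up and not taken from JPMorgan or anyone else.

```python
from dataclasses import dataclass


@dataclass
class WorkSample:
    """One measured period of work. All fields are hypothetical inputs."""
    tasks_accomplished: float       # estimated task size, in whatever unit you trust
    technical_debt_incurred: float  # same unit as tasks; the hard-to-measure part
    time_elapsed: float             # e.g. hours


def efficiency(sample: WorkSample) -> float:
    """Compute (tasks accomplished - technical debt incurred) / time elapsed."""
    if sample.time_elapsed <= 0:
        raise ValueError("time_elapsed must be positive")
    net_work = sample.tasks_accomplished - sample.technical_debt_incurred
    return net_work / sample.time_elapsed


# Made-up numbers: 20% more tasks in the same time, but more debt incurred.
before = WorkSample(tasks_accomplished=10, technical_debt_incurred=2, time_elapsed=40)
after = WorkSample(tasks_accomplished=12, technical_debt_incurred=5, time_elapsed=40)

print(efficiency(before))  # (10 - 2) / 40
print(efficiency(after))   # (12 - 5) / 40
```

With these invented inputs, tasks accomplished go up by 20% while the computed efficiency goes down, because the debt term grew faster. If you only count tasks and time, you can't tell those two situations apart.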

Time on task is easy to measure, and we have some ways to estimate task size (they don't all agree with each other, but at least some thought has been given to it). Measuring Technical Debt is a whole other problem. The consensus is it's pretty hard to measure (see https://www.forbes.com/sites/joemckendrick/2022/06/24/technical-debt-a-hard-to-measure-obstacle-to-digital-transformation/ for example). The only real claims to be able to define it are crap like this article: https://www.sonarsource.com/learn/measuring-and-identifying-code-level-technical-debt-a-practical-guide/ which measures technical debt as “the metrics output by the tool the people who wrote the article are trying to sell you.”

In theory, you can do an analysis in retrospect after you've traced all the bugs you've fixed (and the time it took to fix them) back to the initial code that caused them, but I've never seen anyone seriously attempt that analysis in any kind of thorough or systematic way. And it certainly can't have been done in the JPMorgan case, because not enough time has elapsed since they "started using coding assistants" for all the bugs to have surfaced so they could be measured and traced to root causes.

In all likelihood, like with the declaration by BP that "with AI they need 70% fewer coders" (see https://www.webpronews.com/bp-needs-70-less-coders-thanks-to-ai/ ), it's investor-directed happy talk, and any real measurement would have to wait to see how the code the AI is writing performs (likely not well, see https://leaddev.com/software-quality/how-ai-generated-code-accelerates-technical-debt and https://visualstudiomagazine.com/Articles/2024/01/25/copilot-research.aspx that I’ve referenced previously).

And given that AI fails miserably at really straightforward and simple tasks (it has a very, very low likelihood of correctly explaining where it got any particular piece of information; see "AI Search Has A Citation Problem"), I expect that those companies that lean heavily into AI-generated code will have a lot of debt to clean up - though they may not ever admit it.

In related news, you probably saw this story, which I found hilarious: "AI coding assistant Cursor reportedly tells a 'vibe coder' to write his own damn code"

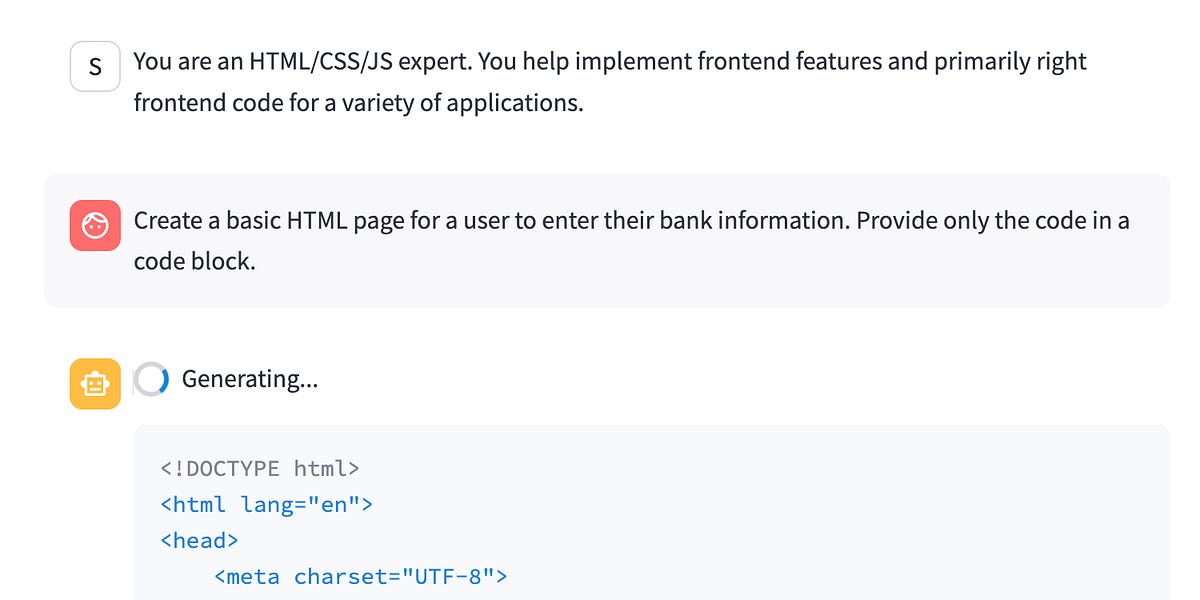

As you might guess, I'm not a fan of "vibe coding" for anything you expect to run more than once, although a lot of people are. For example, I'm reminded by a great piece from "Pivot to AI" that Kevin Roose of the New York Times is a Big Fan of "Vibe Coding" (as evidenced in his article "Not a Coder? With A.I., Just Having an Idea Can Be Enough"; archive link: https://archive.is/JLeQs ) - and that Kevin Roose was also a HUGE fan of Crypto. You should read Molly White's BRILLIANT takedown of Kevin's Pro-crypto Puff Piece from March of 2022:

(Archive of the original article at https://web.archive.org/web/20220318215400/https://www.nytimes.com/interactive/2022/03/18/technology/cryptocurrency-crypto-guide.html#expand ).

By the way, if either or both of those names are unfamiliar to you, you should correct that ASAP. Both David Gerard's "Pivot to AI" and Molly White's "Citation Needed" are fantastic, and should be required reading for anyone who is serious about keeping up with the way that the current AI Hype is following the same B.S. playbook as the old Crypto Hype - often pushed by the same people, like Kevin Roose.

I'm not picking on Kevin Roose here for fun. I'm doing it because Kevin Roose just wrote a HORRIBLE take called "Powerful A.I. Is Coming. We’re Not Ready" (Gift Link: https://www.nytimes.com/2025/03/14/technology/why-im-feeling-the-agi.html?unlocked_article_code=1.404.8tKT.-ALCTbe-6RVJ&smid=url-share ).

If you examine that alongside Kevin's 2022 pro-Crypto piece, you'll see a lot of similarities:

| Crypto in 2022 | A.G.I. in 2025 |
|---|---|
| Crypto will be transformative | Powerful A.I. Is Coming |
| Until fairly recently, if you lived anywhere other than San Francisco, it was possible to go days or even weeks without hearing about cryptocurrency. | In San Francisco, where I’m based, the idea of A.G.I. isn’t fringe or exotic. People here talk about “feeling the A.G.I.,”…Outside the Bay Area, few people have even heard of A.G.I., let alone started planning for it. |
| I’ve been writing about crypto for nearly a decade, a period in which my own views have whipsawed between extreme skepticism and cautious optimism. These days...I’ve come to accept that it isn’t all a cynical money-grab, and that there are things of actual substance being built. | I didn’t arrive at these views as a starry-eyed futurist...I arrived at them as a journalist who has spent a lot of time talking to the engineers building powerful A.I. systems, the investors funding it and the researchers studying its effects. And I’ve come to believe that what’s happening in A.I. right now is bigger than most people understand. |
| [C]rypto wealth and ideology is going to be a transformative force in our society in the coming years. | [B]ig change, world-shaking change, the kind of transformation we’ve never seen before — is just around the corner. |

I could go on and on comparing the two puff pieces - Hell, I might at some point. But hopefully you can see the similarities.

There's been a ton of talk of "AGI" this week, largely due to "Manus". Some examples (I'm not linking to these, they don't deserve it):

"China's Manus AI 'agent' could be our 1st glimpse at artificial general intelligence": www.livescience.com (slash) technology/artificial-intelligence/chinas-manus-ai-agent-could-be-our-1st-glimpse-at-artificial-general-intelligence

"China is on the brink of human-level artificial intelligence": www.independent.co.uk (slash) independentpremium/tech/ai-manus-agi-china-chatgpt-b2713889.html

"China’s Autonomous Agent, Manus, Changes Everything": www.forbes.com (slash) sites/craigsmith/2025/03/08/chinas-autonomous-agent-manus-changes-everything/

But the one article you should read, if you're going to read one, is this one:

Here's the gist:

Overall, I found Manus to be a highly intuitive tool suitable for users with or without coding backgrounds. On two of the three tasks, it provided better results than ChatGPT DeepResearch, though it took significantly longer to complete them.

Manus seems best suited to analytical tasks that require extensive research on the open internet but have a limited scope. In other words, it’s best to stick to the sorts of things a skilled human intern could do during a day of work.

Still, it’s not all smooth sailing. Manus can suffer from frequent crashes and system instability, and it may struggle when asked to process large chunks of text.

So, as I read that, it's slightly better but much slower than a competing product (ChatGPT DeepResearch). That doesn't sound like "the brink of human-level artificial intelligence" to me, nor like something that "changes everything."

Which is confusing and not very helpful, but not surprising, since after all, "No one knows what the hell an AI agent is":

And according to the Association for the Advancement of Artificial Intelligence's 2025 Presidential Panel on the Future of AI Research:

The majority of respondents (76%) assert that “scaling up current AI approaches” to yield AGI is “unlikely” or “very unlikely” to succeed, suggesting doubts about whether current machine learning paradigms are sufficient for achieving general intelligence.

And speaking of a lack of AGI, this is a fantastic piece of research that's hilarious to watch:

"Finally, DeepMind Made An IQ Test For AIs! 🤖"

Two quick follow-ups from previous newsletters:

As a counterpoint to this article I talked about on Feb 21st:

This is a paper on detecting backdoors in models that made me feel a little bit better (just a little bit, though):

And as a non-AI follow-up to the note from Feb 24th about how the US Government's "Cyber Safety Review Board" had been disbanded, putting us all at more risk, here's an article about the state of the US Government's Cybersecurity and Infrastructure Security Agency:

Again, regardless of your politics, this group has been instrumental in keeping the Internet from getting even less safe over the last 6 or 7 years. Making them less effective makes the whole Internet a Buggier and Scarier place.