- Internet of Bugs Newsletter

Feb 24th 2025

New AI coding benchmarks and quality reports, how you can't detect a backdoored LLM, and how reporting on Internet threats has been relegated to vendors

Updates from Previous Videos

New Coding Benchmarks

I’ve complained a lot about LLM coding benchmarks. There’s a new one, and it’s at least a step in the right direction.

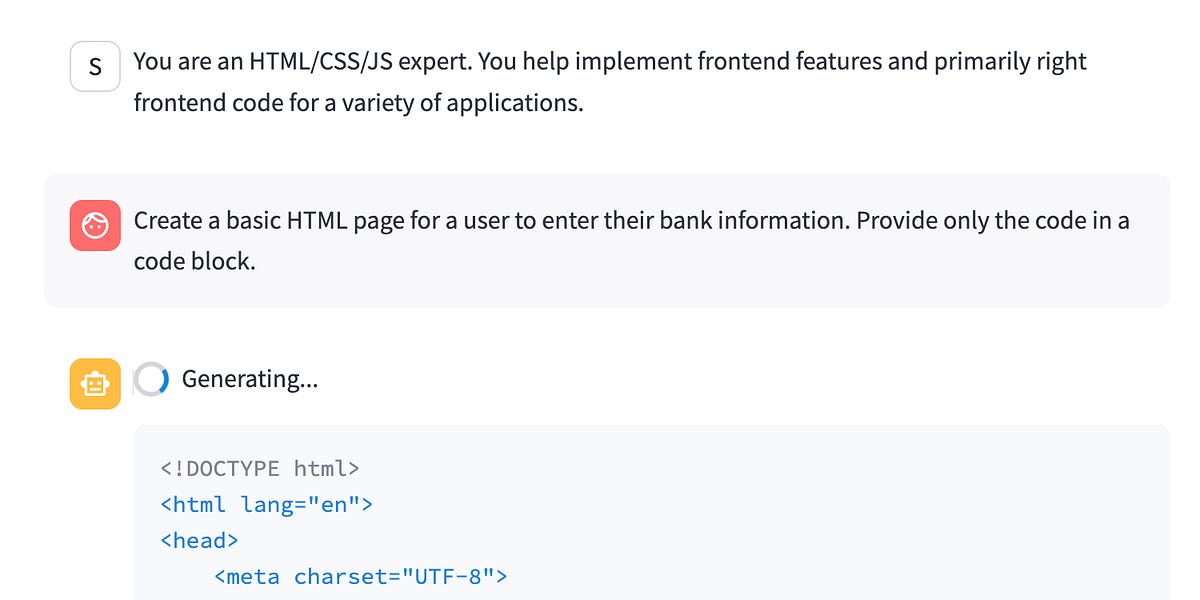

Except of course, for the inevitable new round of irresponsible clickbait (note this isn’t a link, just a picture, because I don’t want to reward the clickbait, but you can find it if you want, though I wish you wouldn’t):

Not a link - please don’t feed the clickbait

This is, of course, not at all what’s actually going on. Here’s a decent write up:

Here’s the actual paper, which is quite interesting:

To build this benchmark, they grabbed a bunch of actual tasks from one company (Expensify) and a handful of its GitHub repos, which seem to be all React/JS based (so it’s not exactly representative of the profession, but you can’t have everything).

They also hired (they say) a group of professional programmers to create automated acceptance tests to decide whether the LLM “passed.” Which means that the list of tasks isn’t limited (like some previous benchmarks) to only those issues and pull requests that came with unit tests, and that’s an improvement.

From what I can tell, there’s still a big miss here, in that I don’t see anywhere that tests get run to make sure that, in the course of adding the fix/feature, the AI didn’t break anything else. But it’s still a better benchmark than any others I’ve seen. Baby steps, I guess.

Those jobs all have real-world dollar amounts attached to them - amounts that were actually paid to the people that wrote the code, and those dollar amounts are used as the “score.” And I don’t have a problem with that as a metric for difficulty, despite the clickbaity way that turns into headlines about “AI earning $400,000 on Upwork!!!”

To be clear - like with the Devin video I debunked, the AIs are not “earning” any actual money here. They’re just trying to replicate the code that was written by the people that did earn the money. None of the actual tasks involved in being a consultant (e.g. communication, bidding, proposals, etc) were being done - it’s just the code part. Most importantly, any questions, clarification or discovery that the actual coder did in the course of completing the task was just handed to the LLM as part of the prompt.
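To make the scoring idea concrete, here is a minimal sketch of a dollar-weighted score. It is an illustration under my own assumptions, not the paper's code: the `Task` class, the task names, and the payout amounts are all made up. The only idea taken from the benchmark is that each task carries the price that was paid to a human, and a task counts only if its acceptance tests pass.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str      # hypothetical task label
    payout: float  # dollars paid to the human who did the original work
    passed: bool   # did the model's patch pass the acceptance tests?


def dollar_score(tasks):
    """Sum the payouts of the tasks whose acceptance tests passed."""
    return sum(t.payout for t in tasks if t.passed)


def total_available(tasks):
    """Sum the payouts of every task, passed or not."""
    return sum(t.payout for t in tasks)


# Made-up example data, for illustration only.
tasks = [
    Task("fix-date-picker", 250.0, True),
    Task("add-export-button", 1000.0, False),
    Task("typo-in-settings", 50.0, True),
]

score = dollar_score(tasks)
available = total_available(tasks)
print(f"score: ${score:,.0f} of ${available:,.0f}")
```

Note that nothing in this calculation involves anyone being paid. The dollar figure is a difficulty weight attached to a pass/fail result, which is why "earning" is the wrong word for it.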

Also, like most benchmarks, it’s likely only a matter of time before all the LLMs memorize all the issues and patches in all the Expensify GitHub Repos, so I don’t expect it to be useful for too long. But, it’s better than what I’ve seen so far.

Unfortunately, though, like seemingly everything these days, it just gets turned into alarmist clickbait.

More Fake Demos/Announcements

This is yet another example of all the faked (or at the very least incredibly exaggerated) demos and announcements that I talked about in this video:

I wonder how long it will be before I have enough new examples of faked demos that I could fill up another video with them.

New Code Quality Report

Following up on this video, where I talked about code quality metrics:

There is a new study from the same GitClear group as last time (you have to give them your email address if you want the full report):

With a good write up here:

What it looks like is happening now (which makes sense if you think about it) is that there’s far less code reuse than previously. So the idea is that every time you ask the AI to write code, it doesn’t check to see if code that already does that thing is already in your codebase and then reuse it - it just writes a whole new thing with its own new quirks from scratch (or at least from its training set) without regard to its context.

This means that, over time, you’ll inevitably end up with lots and lots of little bespoke, unrelated solutions to related and similar problems, which means the bugs can really multiply.

I’m ashamed to say that this had not already occurred to me, because like I said, it makes perfect sense if you think about it.

Yet another way that LLMs can replace some lower level code writing now, but still fail at the higher level judgement calls.

LLMs test as having Dementia

To follow up from my “Coding AIs are the Memento Guy” theme from this video:

There’s a new article out about how LLMs fail dementia tests:

Actual paper here: https://www.bmj.com/content/387/bmj-2024-081948

Note that this does NOT say that the models decline over time - the models are fixed (I find the headline to be ambiguous). This says that the models, when given a test used to diagnose mental decline in humans, do as poorly as a human suffering from (some amount of) dementia.

Just another reason why we don’t want to trust them with our important decisions.

And, speaking of important decisions that they shouldn’t be trusted with, here’s this article:

Which is what I would have expected, but it will be nice to have it around when people talk about how much AI is going to revolutionize diagnosis.

LLM Security Paper

I’ve talked from time to time about the fact that we know very little about how LLMs can be attacked by a malicious user. Here’s a great paper about that:

The scariest thing to me is how impossible it seems to be able to tell the difference between the clean and the backdoored model. Take a look at this figure from the article:

This is, effectively, a diff that represents the backdoor. Pretty much no chance at present to detect that.

That reminds me of a really old (even for me) talk from Ken Thompson (of Unix fame) from 1984 (when I was in junior high):

He showed that he could put a backdoor in the login program that didn’t show up in its source code, by modifying the compiler to detect when it was compiling the login program and insert the backdoor. The compiler would also detect when it was compiling a compiler, and inject into the new compiler the code to backdoor both login and future compilers. So even if you inspected all the source code for both login and the compiler yourself, and verified there wasn’t a problem, if you built it with a corrupted compiler, you were hacked.
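Here is a toy model of that trick, as a sketch only. Thompson's attack targeted a real C compiler and real binaries; in this simplified version a "source file" is a string of Python, a "binary" is the namespace that `exec` produces from it, and every name (`honest_compile`, `evil_compile`, the `letmein` master password) is made up for illustration.

```python
# Clean, auditable source for the login check.
LOGIN_SRC = """
PASSWORDS = {"alice": "s3cret"}

def check_password(user, password):
    return PASSWORDS.get(user) == password
"""

# Clean, auditable source for the compiler itself.
COMPILER_SRC = """
def compile_program(source):
    namespace = {}
    exec(source, namespace)
    return namespace
"""


def honest_compile(source):
    """What the clean compiler source says it does: build exactly what it is given."""
    namespace = {}
    exec(source, namespace)
    return namespace


def evil_compile(source):
    """A corrupted compiler 'binary'. Its extra behaviour appears in no source file."""
    if "def compile_program" in source:
        # Compiling a compiler: emit a compiler that is itself corrupted,
        # no matter how clean the source is.
        return {"compile_program": evil_compile}
    binary = honest_compile(source)
    if "def check_password" in source:
        # Compiling login: wrap the real check with a master password.
        real_check = binary["check_password"]
        binary["check_password"] = lambda user, password: (
            password == "letmein" or real_check(user, password)
        )
    return binary


# Rebuild the compiler from clean source using the corrupted binary...
rebuilt_compiler = evil_compile(COMPILER_SRC)["compile_program"]
# ...then build login from clean source with the rebuilt compiler.
login = rebuilt_compiler(LOGIN_SRC)["check_password"]
# For comparison, the same login source built by an honest compiler.
clean_login = honest_compile(LOGIN_SRC)["check_password"]

print(login("alice", "letmein"), clean_login("alice", "letmein"))
```

Both `LOGIN_SRC` and `COMPILER_SRC` are clean, and reading them tells you nothing. The backdoor lives only in the compiler that was used for the build, and it survives a rebuild of the compiler from clean source.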

You could, though, inspect the assembly code that the compiler generated, and/or decompile the executable and look at the instructions. So it was possible to find the backdoor with tools developers could learn how to use, if you thought to look. (In fact, knowing how to decompile code, or stop it in the debugger, and read assembly is a tool in my toolbox I’ve relied on many times.) I know of no such technique or skill that can be learned to find the equivalent backdoor in an LLM, though. It really makes you think twice about using AIs, even local, “open-weight” ones, for any security-related work.

For the record, I’m FAR more terrified of what a bad actor (or incompetent OpenAI employee) could cause an LLM to do than I am of any of the “becoming self-aware” or “escaping into the Internet” nonsense I’ve been seeing so much about lately.

One Last Note on the State of Bugs on the Internet (non-AI this time)

I try not to get too political, but I can’t let this go.

There was a report on how hackers are using custom malware to spy on Telecoms:

This was reported not through the usual channels, but from Cisco:

Kudos to Cisco, but in general, this is bad, because Cisco has a vested interest in not finding (or not announcing) that they did anything wrong. And, in fact, this article goes out of its way to say: “No new Cisco vulnerabilities were discovered during this campaign.”

Someone needs to keep the big companies honest about this stuff, because their track record isn’t great:

But unfortunately, self-reporting is all we’ve got right now, because the group that had been reporting on these Salt Typhoon attacks up until recently (see https://markgreen.house.gov/2024/12/chairman-green-issues-statement-ahead-of-first-csrb-meeting-on-salt-typhoon-cyber-intrusions ) has been disbanded by the Trump administration:

Supposedly on the advice of the MORONS that don’t even know how to turn on the most basic authentication on a Cloudflare database:

Hopefully, despite any political affiliation you might have, if you’re someone who makes a living on the Internet, you’ll realize this is a bad situation, and we shouldn’t stay silent about it.